Hello! In this article we look at solving systems of nonlinear algebraic equations in Matlab. Having covered single nonlinear equations, we now move on to systems of them and consider several ways to solve them in Matlab.

- Overview

- Matlab's function for solving nonlinear systems

- The simple iteration method for nonlinear systems in Matlab

- Newton's method for nonlinear systems in Matlab

- Conclusion

- fsolve

- Syntax

- Description

- Examples

- Solution of 2-D Nonlinear System

- Solution with Nondefault Options

- Solve Parameterized Equation

- Solve a Problem Structure

- Solution Process of Nonlinear System

- Examine Matrix Equation Solution

- Input Arguments

- fun — Nonlinear equations to solve function handle | function name

- x0 — Initial point real vector | real array

- options — Optimization options output of optimoptions | structure as optimset returns

- Fsolve Matlab: systems of nonlinear equations

Overview

In the previous article we looked at nonlinear equations, and now we need to solve systems of such equations. A system is a set of two or more nonlinear equations for which it is sometimes possible to find a solution that satisfies every equation in the system at once.

In the standard form, the number of unknowns equals the number of equations. The task is to find values of the unknowns that, when substituted into the equations, drive the value of each equation to 0. There may be several such sets of values, even infinitely many, and sometimes no solution exists at all.

Solving a system of nonlinear equations requires iterative methods: methods that, over a number of steps, produce a solution to a given accuracy. It is also very important to start from a sufficiently close initial approximation, that is, a set of variable values near the solution. For a system of two equations, a good initial approximation can be read off a plot of the two functions.

Next, we look at the standard Matlab function for solving systems of nonlinear algebraic equations, and then write the simple iteration method and Newton's method ourselves.

Matlab's function for solving nonlinear systems

Matlab provides the fsolve function, which solves systems of nonlinear equations. Let us go straight to an example. Looking ahead, we will also solve it with the other methods for comparison.

Solve the following system of nonlinear equations to a tolerance of $10^{-2}$:

cos(x-1) + y = 0.5

x-cos(y) = 3

We have a system of 2 nonlinear equations, and the best first step is to plot it. We use Matlab's ezplot command, remembering to first rewrite the equations in standard form, with 0 on the right-hand side.
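Written out, the standard form of the example system looks like this. The names $f_1$ and $f_2$ for the two left-hand sides are introduced here only for convenience:

$$
\begin{aligned}
f_1(x, y) &= \cos(x - 1) + y - 0.5 = 0,\\
f_2(x, y) &= x - \cos(y) - 3 = 0.
\end{aligned}
$$

A solution is a point where the zero-level curves of $f_1$ and $f_2$ intersect, and that intersection is what the plot is meant to reveal.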

The ezplot function draws the curve from a symbolic (string) form of the equation, and we use the set function to set the line color and width. Let's look at the output.

As the plot shows, the two curves intersect at a single point, so this system of nonlinear equations has a single solution. As mentioned earlier, we read the initial approximation off the plot and take it to be (3.0, 1.0). Now we find the solution starting from it.

Create a function in an m-file named fun.m that evaluates the system.

Note that this function takes a vector of approximations and returns a vector of function values. That is, x(1) is used in place of x and x(2) in place of y. This is necessary because fsolve works with vectors rather than with separate variables.
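The original fun.m listing is not reproduced here. As a rough stand-in, the following Python sketch computes the same two left-hand sides, assuming fun.m returns them in the order of the equations above. The name `fun` mirrors the m-file, and `x[0]` and `x[1]` play the roles of Matlab's x(1) and x(2), since Python indexes from 0.

```python
import math

def fun(x):
    """Residual vector F(x) of the example system, with x = [x, y]."""
    return [
        math.cos(x[0] - 1) + x[1] - 0.5,  # cos(x-1) + y - 0.5
        x[0] - math.cos(x[1]) - 3,        # x - cos(y) - 3
    ]

# Evaluate at the fsolve answer, rounded to four decimals.
print(fun([3.3559, 1.2069]))
```

At the rounded answer, both residuals are small (well below $10^{-3}$). They are not as small as the $10^{-9}$ values fsolve reports, because the rounding to four decimals itself introduces some error.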

Finally, we add the fsolve call to the plotting code.

This gives us two m-files. The first one plots the graph and calls fsolve, and the second one computes the function values themselves. If anything is unclear, the source files are provided at the end of the article.

Finally, here are the results:

xr (the solution vector) =

3.3559 1.2069

fr (the function values at xr; they should be close to 0) =

1.0e-09 *

0.5420 0.6829

ex (the exit flag; a value of 1 means the solver converged) =

1

And, of course, a plot with the answer.

The simple iteration method for nonlinear systems in Matlab

Now we move on to methods that we program ourselves. The first is the simple iteration method, which approaches the solution step by step until the required accuracy is reached. The algorithm is quite simple:

- Plot the graph, if possible.

- Solve each equation for one unknown: x1 from the first equation, x2 from the second, and so on.

- Choose an initial approximation X0, for example (3.0, 1.0).

- Compute x1, x2, ..., xn from the expressions obtained in step 2, substituting the values from X0.

- Check the convergence condition: the difference X - X0 must be smaller in magnitude than the tolerance.

- If the condition in step 5 is not met, take X as the new X0 and repeat step 4.

Let's move on to practice, where everything will become clearer.

Solve the system of nonlinear equations by simple iteration to a tolerance of $10^{-2}$:

cos(x-1) + y = 0.5

x-cos(y) = 3

We already plotted the system in the previous section, so we go straight to the transformation. Expressing x from the first equation is difficult, so we swap the equations. This does not affect the solution:

x-cos(y) = 3

cos(x-1) + y = 0.5

Next comes the Matlab code.

In this part, we express x1 and x2 (here 'x' and 'y') and set the tolerance.
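Solving the reordered equations for $x$ and $y$ gives the iteration formulas below. Read literally, step 4 of the algorithm substitutes only the old values $X_0$. However, the values reported at the end of this section match a Seidel-style update, which reuses the freshly computed $x_{k+1}$ when updating $y$. That variant is shown here. This is an inference, since the original listing is not available:

$$
\begin{aligned}
x_{k+1} &= 3 + \cos(y_k),\\
y_{k+1} &= 0.5 - \cos(x_{k+1} - 1).
\end{aligned}
$$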

In this part, steps 4 to 6 run in a loop. The values of x and y are updated iteratively until the change from the previous values becomes smaller than the tolerance.

k = 10

x = 3.3587

y = 1.2088

As you can see, the results differ slightly from those of the previous section. This is due to the tolerance: if you make it smaller, the results approach those of Matlab's standard solver.
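Here is a minimal Python sketch of the simple iteration loop. It assumes that the freshly computed x is used when updating y (a Seidel-style update). It also assumes a stopping rule that compares the largest coordinate change with the tolerance. The function name `simple_iteration` and the `max_iter` guard are illustrative choices, not taken from the original Matlab code.

```python
import math

def simple_iteration(x0, y0, tol=1e-2, max_iter=100):
    """Fixed-point (simple) iteration for the reordered system
    x = 3 + cos(y),  y = 0.5 - cos(x - 1),
    using the new x when updating y (Seidel-style update).
    Returns (x, y, number_of_passes)."""
    x, y = x0, y0
    for k in range(1, max_iter + 1):
        x_new = 3 + math.cos(y)
        y_new = 0.5 - math.cos(x_new - 1)
        # Stop once neither coordinate changed by more than tol.
        if max(abs(x_new - x), abs(y_new - y)) < tol:
            return x_new, y_new, k
        x, y = x_new, y_new
    raise RuntimeError("simple iteration did not converge within max_iter passes")

x, y, k = simple_iteration(3.0, 1.0)
print(f"k = {k}, x = {x:.4f}, y = {y:.4f}")
```

Starting from (3.0, 1.0) with a tolerance of $10^{-2}$, this sketch stops near x ≈ 3.3587, y ≈ 1.2088, in line with the values above. The pass count it reports depends on how iterations are counted, so it may differ by one from k = 10.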

Newton's method for nonlinear systems in Matlab

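Solving nonlinear systems in Matlab with Newton's method is more efficient than simple iteration. Newton's method usually needs far fewer iterations because it converges quadratically near the solution, provided the Jacobian is nonsingular there. Let us state the algorithm right away and then implement it.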

- Plot the graph, if possible.

- Choose an initial approximation X0, for example (3.0, 1.0).

- Compute the Jacobian matrix w (the matrix of partial derivatives of each equation with respect to each variable) at X0. Also compute its determinant, which must be nonzero for w to be invertible.

- Find the increment vector $dx = -w^{-1} f(X_0)$.

- Find the solution vector $X = X_0 + dx$.

- Check the convergence condition: X - X0 must be smaller in magnitude than the tolerance. If it is not, take X as the new X0 and return to step 3.

Next, we solve the same example as in the previous sections. We have already plotted it, and the initial approximation stays the same.

Solve the system of nonlinear equations by Newton's method to a tolerance of $10^{-2}$:

cos(x-1) + y = 0.5

x-cos(y) = 3

Now to the code.

First, we set the initial approximation. Next, we need the Jacobian matrix, that is, the partial derivatives with respect to all variables. We use symbolic differentiation in Matlab for this, namely the diff command applied to symbolic variables.
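For this system, the partial derivatives are also easy to write down by hand, and the loop later does exactly that. With $f_1 = \cos(x-1) + y - 0.5$ and $f_2 = x - \cos(y) - 3$, the Jacobian is

$$
w(x, y) =
\begin{pmatrix}
\partial f_1/\partial x & \partial f_1/\partial y\\
\partial f_2/\partial x & \partial f_2/\partial y
\end{pmatrix}
=
\begin{pmatrix}
-\sin(x - 1) & 1\\
1 & \sin y
\end{pmatrix},
\qquad
\det w = -\sin(x - 1)\sin y - 1.
$$

At $X_0 = (3.0, 1.0)$ the determinant is about $-1.77$. So $w$ is invertible there, and the first Newton step is well defined.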

Next, we perform the first iteration of the method to obtain the output vector X. Inside the loop, we can then compare it with the approximation.

In this part of the code, we perform the first iteration to obtain the solution vector so we can compare it with the initial approximation. Note that to evaluate a symbolic function in Matlab, you use the subs function, which substitutes numerical values for the variables. The double function then converts the symbolic result to a numerical value.

All the steps we took to compute the derivatives could in fact have been skipped by writing the derivatives down directly. That is exactly what we do inside the loop.

This part of the code runs the loop that computes the solution to the required tolerance. Note again the difference between the two parts. Before the loop, in the first iteration, we used diff, subs, and double to compute the derivatives in Matlab. Inside the loop, the Jacobian matrix is written out explicitly from those partial derivatives. This is done to show both options that Matlab offers.

The correct result is reached in 3 iterations. Note also that such methods can sometimes fail to converge and keep iterating indefinitely. To prevent this, we added a check on the number of iterations and do not allow more than 100.
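The Newton loop can be sketched in Python as follows. This is an illustration, not a port of the original Matlab files. The Jacobian is written out explicitly, as in the loop described above. Because the linear system $w \cdot dx = -f$ is only 2-by-2, it is solved with Cramer's rule. The 100-iteration cap mirrors the safeguard just mentioned. The function names are illustrative.

```python
import math

def F(x, y):
    """Left-hand sides of the system in standard form."""
    return (math.cos(x - 1) + y - 0.5,
            x - math.cos(y) - 3)

def jacobian(x, y):
    """Jacobian w written out explicitly from the partial derivatives."""
    return ((-math.sin(x - 1), 1.0),
            (1.0, math.sin(y)))

def newton(x0, y0, tol=1e-2, max_iter=100):
    """Newton's method; returns (x, y, number_of_iterations)."""
    x, y = x0, y0
    for k in range(1, max_iter + 1):
        f1, f2 = F(x, y)
        (a, b), (c, d) = jacobian(x, y)
        det = a * d - b * c
        if det == 0:
            raise ZeroDivisionError("Jacobian is singular at the current point")
        # Increment dx = -w^{-1} f, solved by Cramer's rule for the 2-by-2 case.
        dx = (-f1 * d + b * f2) / det
        dy = (-a * f2 + c * f1) / det
        x, y = x + dx, y + dy
        # Convergence check: the step X - X0 is below the tolerance.
        if max(abs(dx), abs(dy)) < tol:
            return x, y, k
    raise RuntimeError("no convergence within max_iter iterations")

x, y, k = newton(3.0, 1.0)
print(f"iterations = {k}, x = {x:.4f}, y = {y:.4f}")
```

Starting from (3.0, 1.0) with a tolerance of $10^{-2}$, this sketch also stops after three updates, close to the fsolve answer (3.3559, 1.2069).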

Conclusion

In this article, we introduced the basic concepts of systems of nonlinear algebraic equations in Matlab. We looked at several ways to solve them: with Matlab's standard functions and with our own implementations of the simple iteration method and Newton's method.

fsolve

Solve system of nonlinear equations

Syntax

Description

Nonlinear system solver

Solves a problem specified by

$$F(x) = 0$$

for x, where F(x) is a function that returns a vector value.

x is a vector or a matrix; see Matrix Arguments.

x = fsolve(fun,x0) starts at x0 and tries to solve the equations fun(x) = 0, an array of zeros.

Note

Passing Extra Parameters explains how to pass extra parameters to the vector function fun(x), if necessary. See Solve Parameterized Equation.

x = fsolve(fun,x0,options) solves the equations with the optimization options specified in options. Use optimoptions to set these options.

x = fsolve(problem) solves problem, a structure described in problem.

[x,fval] = fsolve(___), for any syntax, returns the value of the objective function fun at the solution x.

[x,fval,exitflag,output] = fsolve(___) additionally returns a value exitflag that describes the exit condition of fsolve, and a structure output with information about the optimization process.

[x,fval,exitflag,output,jacobian] = fsolve(___) returns the Jacobian of fun at the solution x.

Examples

Solution of 2-D Nonlinear System

This example shows how to solve two nonlinear equations in two variables. The equations are

Convert the equations to the form

Write a function that computes the left-hand side of these two equations.

Save this code as a file named root2d.m on your MATLAB® path.

Solve the system of equations starting at the point [0,0].

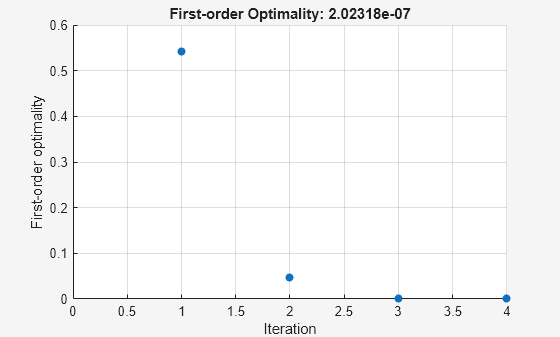

Solution with Nondefault Options

Examine the solution process for a nonlinear system.

Set options to have no display and a plot function that displays the first-order optimality, which should converge to 0 as the algorithm iterates.

The equations in the nonlinear system are

Convert the equations to the form

Write a function that computes the left-hand side of these two equations.

Save this code as a file named root2d.m on your MATLAB® path.

Solve the nonlinear system starting from the point [0,0] and observe the solution process.

Solve Parameterized Equation

You can parameterize equations as described in the topic Passing Extra Parameters. For example, the paramfun helper function at the end of this example creates the following equation system parameterized by c:

$$
\begin{aligned}
2x_1 + x_2 &= \exp(c x_1)\\
-x_1 + 2x_2 &= \exp(c x_2).
\end{aligned}
$$

To solve the system for a particular value, in this case c = -1, set c in the workspace and create an anonymous function in x from paramfun.

Solve the system starting from the point x0 = [0 1].

To solve for a different value of c, enter c in the workspace and create the fun function again, so it has the new c value.

This code creates the paramfun helper function.

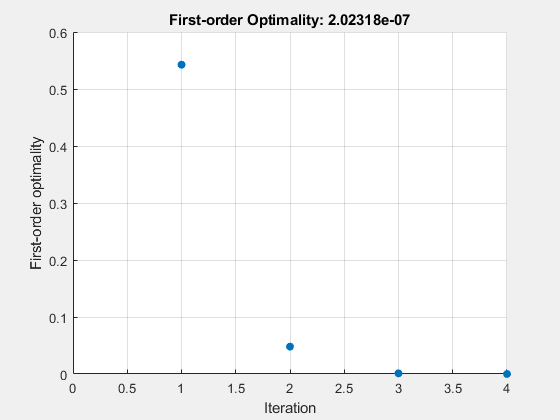
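Here is a Python analog of this parameterized setup, as a sketch. `paramfun` follows the equations above. `functools.partial` plays the role of Matlab's anonymous function `@(x) paramfun(x,c)`: it binds the current value of `c`, so changing `c` later requires creating the function again, as described above. `newton2` is a hypothetical stand-in for fsolve that runs a plain Newton iteration with an analytic Jacobian.

```python
import math
from functools import partial

def paramfun(x, c):
    """F(x) for 2*x1 + x2 = exp(c*x1) and -x1 + 2*x2 = exp(c*x2)."""
    x1, x2 = x
    return [2 * x1 + x2 - math.exp(c * x1),
            -x1 + 2 * x2 - math.exp(c * x2)]

def paramjac(x, c):
    """Analytic Jacobian of paramfun with respect to x."""
    x1, x2 = x
    return [[2 - c * math.exp(c * x1), 1.0],
            [-1.0, 2 - c * math.exp(c * x2)]]

def newton2(fun, jac, x0, tol=1e-12, max_iter=50):
    """Plain Newton iteration for a 2-by-2 system (hypothetical fsolve stand-in)."""
    x = list(x0)
    for _ in range(max_iter):
        f = fun(x)
        (j11, j12), (j21, j22) = jac(x)
        det = j11 * j22 - j12 * j21
        # Solve J * step = -f by Cramer's rule.
        s1 = (-f[0] * j22 + j12 * f[1]) / det
        s2 = (-j11 * f[1] + j21 * f[0]) / det
        x = [x[0] + s1, x[1] + s2]
        if max(abs(s1), abs(s2)) < tol:
            return x
    raise RuntimeError("Newton iteration did not converge")

c = -1
fun = partial(paramfun, c=c)   # binds the current c, like @(x) paramfun(x,c)
jac = partial(paramjac, c=c)
x = newton2(fun, jac, [0, 1])
print(x, fun(x))
```

For c = -1, starting from [0, 1], the iteration converges to approximately (0.1976, 0.4255).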

Solve a Problem Structure

Create a problem structure for fsolve and solve the problem.

Solve the same problem as in Solution with Nondefault Options, but formulate the problem using a problem structure.

Set options for the problem to have no display and a plot function that displays the first-order optimality, which should converge to 0 as the algorithm iterates.

The equations in the nonlinear system are

Convert the equations to the form

Write a function that computes the left-hand side of these two equations.

Save this code as a file named root2d.m on your MATLAB® path.

Create the remaining fields in the problem structure.

Solve the problem.

Solution Process of Nonlinear System

This example returns the iterative display showing the solution process for the system of two equations and two unknowns

$$
\begin{aligned}
2x_1 - x_2 &= e^{-x_1}\\
-x_1 + 2x_2 &= e^{-x_2}.
\end{aligned}
$$

Rewrite the equations in the form F(x) = 0:

$$
\begin{aligned}
2x_1 - x_2 - e^{-x_1} &= 0\\
-x_1 + 2x_2 - e^{-x_2} &= 0.
\end{aligned}
$$

Start your search for a solution at x0 = [-5 -5].

First, write a function that computes F, the values of the equations at x.

Create the initial point x0.

Set options to return iterative display.

Solve the equations.

The iterative display shows f(x), which is the square of the norm of the function F(x). This value decreases to near zero as the iterations proceed. The first-order optimality measure likewise decreases to near zero as the iterations proceed. These entries show the convergence of the iterations to a solution. For the meanings of the other entries, see Iterative Display.

The fval output gives the function value F(x), which should be zero at a solution (to within the FunctionTolerance tolerance).
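The quantity in the f(x) column can be reproduced with a short Python sketch. This is not fsolve's algorithm, which defaults to a trust-region dogleg method. It is a plain Newton iteration, chosen here only to show how the squared norm of F shrinks from the start point x0 = [-5 -5]. The function names are illustrative.

```python
import math

def F(x):
    """F(x) = 0 form of 2*x1 - x2 = exp(-x1), -x1 + 2*x2 = exp(-x2)."""
    return [2 * x[0] - x[1] - math.exp(-x[0]),
            -x[0] + 2 * x[1] - math.exp(-x[1])]

def J(x):
    """Analytic Jacobian of F; its determinant is always at least 3."""
    return [[2 + math.exp(-x[0]), -1.0],
            [-1.0, 2 + math.exp(-x[1])]]

def newton_with_display(x0, tol=1e-10, max_iter=50):
    """Newton iteration that prints the squared norm of F at each step."""
    x = list(x0)
    history = []
    for k in range(max_iter):
        f = F(x)
        sq_norm = f[0] ** 2 + f[1] ** 2   # what the f(x) column reports
        history.append(sq_norm)
        print(f"{k:4d}   f(x) = {sq_norm:.3e}")
        (a, b), (c, d) = J(x)
        det = a * d - b * c
        s1 = (-f[0] * d + b * f[1]) / det
        s2 = (-a * f[1] + c * f[0]) / det
        x = [x[0] + s1, x[1] + s2]
        if max(abs(s1), abs(s2)) < tol:
            return x, history
    raise RuntimeError("Newton iteration did not converge")

x, history = newton_with_display([-5, -5])
print(x)
```

Both components converge to about 0.5671, the number t satisfying $t = e^{-t}$. This happens because the start point and the equations are symmetric in $x_1$ and $x_2$.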

Examine Matrix Equation Solution

Find a matrix X that satisfies

$$X \cdot X \cdot X = \begin{bmatrix} 1 & 2 \\ 3 & 4 \end{bmatrix},$$

starting at the point x0 = [1,1;1,1]. Create an anonymous function that calculates the matrix equation and create the point x0.

Set options to have no display.

Examine the fsolve outputs to see the solution quality and process.

The exit flag value 1 indicates that the solution is reliable. To verify this manually, calculate the residual (sum of squares of fval) to see how close it is to zero.

This small residual confirms that x is a solution.

You can see in the output structure how many iterations and function evaluations fsolve performed to find the solution.
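The residual check can be illustrated in Python. Because fsolve's actual output is not reproduced here, the candidate X in this sketch comes from a different route. It is a real cube root of $A = \begin{bmatrix}1&2\\3&4\end{bmatrix}$ built from its eigendecomposition, which works because $A$ has two distinct real eigenvalues. This sketch does not establish whether this is the same root fsolve finds from x0 = [1,1;1,1]. The relevant part is the last step, which sums the squares of the entries of X*X*X - A. The helper names are illustrative.

```python
import math

A = [[1.0, 2.0], [3.0, 4.0]]

def matmul(P, Q):
    """Product of two 2-by-2 matrices stored as nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def real_cube_root_2x2(M):
    """Real cube root of a 2-by-2 matrix with distinct real eigenvalues and
    M[0][1] != 0, computed as V * diag(cbrt(lambda)) * inv(V)."""
    (a, b), (c, d) = M
    tr, det = a + d, a * d - b * c
    disc = math.sqrt(tr * tr - 4 * det)
    lam = [(tr + disc) / 2, (tr - disc) / 2]
    # Column j of V is an eigenvector (b, lambda_j - a) for lambda_j.
    V = [[b, b], [lam[0] - a, lam[1] - a]]
    det_v = V[0][0] * V[1][1] - V[0][1] * V[1][0]
    V_inv = [[V[1][1] / det_v, -V[0][1] / det_v],
             [-V[1][0] / det_v, V[0][0] / det_v]]
    cbrt = [math.copysign(abs(l) ** (1 / 3), l) for l in lam]
    D = [[cbrt[0], 0.0], [0.0, cbrt[1]]]
    return matmul(matmul(V, D), V_inv)

X = real_cube_root_2x2(A)
X3 = matmul(matmul(X, X), X)
fval = [[X3[i][j] - A[i][j] for j in range(2)] for i in range(2)]
residual = sum(v * v for row in fval for v in row)   # sum of squares of fval
print(X)
print(residual)
```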

Input Arguments

fun — Nonlinear equations to solve

function handle | function name

Nonlinear equations to solve, specified as a function handle or function name. fun is a function that accepts a vector x and returns a vector F, the nonlinear equations evaluated at x. The equations to solve are F = 0 for all components of F. The function fun can be specified as a function handle for a file, for example @myfun, where myfun is a MATLAB® function that computes F.

fun can also be a function handle for an anonymous function.

fsolve passes x to your objective function in the shape of the x0 argument. For example, if x0 is a 5-by-3 array, then fsolve passes x to fun as a 5-by-3 array.

If the Jacobian can also be computed and the 'SpecifyObjectiveGradient' option is true (set with optimoptions), the function fun must return, in a second output argument, the Jacobian value J, a matrix, at x.

If fun returns a vector (matrix) of m components and x has length n, where n is the length of x0, the Jacobian J is an m-by-n matrix where J(i,j) is the partial derivative of F(i) with respect to x(j). (The Jacobian J is the transpose of the gradient of F.)
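This definition of J can be checked numerically by comparing an analytic Jacobian with a forward-difference approximation, which is the idea behind the CheckGradients option. The minimal Python sketch below reuses the two-equation system from the first part of this article (cos(x-1) + y = 0.5, x - cos(y) = 3). The step h = 1e-7 is an assumption chosen for illustration, not fsolve's own finite-difference rule.

```python
import math

def F(x):
    """Example system in F(x) = 0 form."""
    return [math.cos(x[0] - 1) + x[1] - 0.5, x[0] - math.cos(x[1]) - 3]

def analytic_jacobian(x):
    """J(i, j) = dF(i)/dx(j), derived by hand."""
    return [[-math.sin(x[0] - 1), 1.0], [1.0, math.sin(x[1])]]

def fd_jacobian(fun, x, h=1e-7):
    """Forward differences: column j approximates dF/dx(j)."""
    f0 = fun(x)
    m, n = len(f0), len(x)
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        xp = list(x)
        xp[j] += h
        fp = fun(xp)
        for i in range(m):
            J[i][j] = (fp[i] - f0[i]) / h
    return J

x = [3.0, 1.0]
Ja, Jf = analytic_jacobian(x), fd_jacobian(F, x)
max_diff = max(abs(Ja[i][j] - Jf[i][j]) for i in range(2) for j in range(2))
print(max_diff)
```

A small maximum difference indicates that the hand-derived Jacobian is consistent with the function values. A large one usually points to a sign or index mistake in J(i,j).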

Example: fun = @(x)x*x*x-[1,2;3,4]

Data Types: char | function_handle | string

x0 — Initial point

real vector | real array

Initial point, specified as a real vector or real array. fsolve uses the number of elements in and size of x0 to determine the number and size of variables that fun accepts.

Example: x0 = [1,2,3,4]

Data Types: double

options — Optimization options

output of optimoptions | structure as optimset returns

Optimization options, specified as the output of optimoptions or a structure such as optimset returns.

Some options apply to all algorithms, and others are relevant for particular algorithms. See Optimization Options Reference for detailed information.

Some options are absent from the optimoptions display. These options appear in italics in the following table. For details, see View Options.

| Algorithm | Option | Description |
|---|---|---|
| All algorithms | Algorithm | |
| | CheckGradients | Compare user-supplied derivatives (gradients of objective or constraints) to finite-differencing derivatives. The choices are true or the default false. For optimset, the name is DerivativeCheck and the values are 'on' or 'off'. See Current and Legacy Option Names. |
| | Diagnostics | |
| | DiffMaxChange | |
| | DiffMinChange | |
| | FiniteDifferenceStepSize | |
| | FiniteDifferenceType | |
| | FunctionTolerance | |
| | FunValCheck | |
| | MaxFunctionEvaluations | |
| | MaxIterations | |
| | OptimalityTolerance | |
| | SpecifyObjectiveGradient | |
| | StepTolerance | |
| | UseParallel | |
| trust-region algorithm | JacobianMultiplyFcn | |
| | JacobPattern | |
| | MaxPCGIter | |
| | PrecondBandWidth | |
| | SubproblemAlgorithm | |
| Levenberg-Marquardt algorithm | InitDamping | |
| | ScaleProblem | |

| Argument | Description |
|---|---|
| fun | The nonlinear system of equations to solve. fun is a function that accepts a vector x and returns a vector F, the nonlinear equations evaluated at x. The function fun can be specified as a function handle to a MATLAB function such as myfun; fun can also be an inline object. If the Jacobian can also be computed and the Jacobian parameter is set to 'on' (with optimset), then the function fun must return, in a second output argument, the Jacobian value J, a matrix, at x. Note that by checking the value of nargout, the function can avoid computing J when fun is called with only one output argument (in the case where the optimization algorithm only needs the value of F but not J). If fun returns a vector (matrix) of m components and x has length n, where n is the length of x0, then the Jacobian J is an m-by-n matrix where J(i,j) is the partial derivative of F(i) with respect to x(j). (Note that the Jacobian J is the transpose of the gradient of F.) |
| options | Options provides the function-specific details for the options parameters. |

| exitflag | Describes the exit condition: |
|---|---|
| > 0 | The function converged to a solution x. |
| 0 | The maximum number of function evaluations or iterations was exceeded. |

| output | Structure containing information about the optimization. The fields of the structure are: |
|---|---|
| iterations | Number of iterations taken. |
| funcCount | Number of function evaluations. |
| algorithm | Algorithm used. |
| cgiterations | Number of PCG iterations (large-scale algorithm only). |
| stepsize | Final step size taken (medium-scale algorithm only). |
| firstorderopt | Measure of first-order optimality (large-scale algorithm only). For large-scale problems, the first-order optimality is the infinity norm of the gradient $g = J^T F$ (see Nonlinear Least-Squares). |

| Option | Description |
|---|---|
| LargeScale | Use the large-scale algorithm if possible when set to 'on'. Use the medium-scale algorithm when set to 'off'. The default for fsolve is 'off'. |
| Diagnostics | Print diagnostic information about the function to be minimized. |
| Display | Level of display. 'off' displays no output; 'iter' displays output at each iteration; 'final' (default) displays just the final output. |
| Jacobian | If 'on', fsolve uses a user-defined Jacobian (defined in fun), or Jacobian information (when using JacobMult), for the objective function. If 'off', fsolve approximates the Jacobian using finite differences. |
| MaxFunEvals | Maximum number of function evaluations allowed. |
| MaxIter | Maximum number of iterations allowed. |
| TolFun | Termination tolerance on the function value. |
| TolX | Termination tolerance on x. |
| JacobMult | Function handle for the Jacobian multiply function. For large-scale structured problems, this function computes the Jacobian matrix products J*Y, J'*Y, or J'*(J*Y) without actually forming J. The function takes Jinfo, Y, flag, and optional additional parameters p1, p2, ..., and returns W. Jinfo and the additional parameters contain the matrices used to compute J*Y (or J'*Y, or J'*(J*Y)). The first argument Jinfo must be the same as the second argument returned by the objective function fun. The parameters p1, p2, ... are the same additional parameters that are passed to fsolve (and to fun). Y is a matrix that has the same number of rows as there are dimensions in the problem. flag determines which product to compute: if flag == 0 then W = J'*(J*Y); if flag > 0 then W = J*Y; if flag < 0 then W = J'*Y. In each case, J is not formed explicitly. fsolve uses Jinfo to compute the preconditioner. See Nonlinear Minimization with a Dense but Structured Hessian and Equality Constraints for a similar example. |
| JacobPattern | Sparsity pattern of the Jacobian for finite differencing. If it is not convenient to compute the Jacobian matrix J in fun, fsolve can approximate J via sparse finite differences, provided the structure of J (i.e., the locations of the nonzeros) is supplied as the value for JacobPattern. In the worst case, if the structure is unknown, you can set JacobPattern to be a dense matrix, and a full finite-difference approximation is computed in each iteration (this is the default if JacobPattern is not set). This can be very expensive for large problems, so it is usually worth the effort to determine the sparsity structure. |
| MaxPCGIter | Maximum number of PCG (preconditioned conjugate gradient) iterations (see the Algorithm section below). |
| PrecondBandWidth | Upper bandwidth of the preconditioner for PCG. By default, diagonal preconditioning is used (upper bandwidth of 0). For some problems, increasing the bandwidth reduces the number of PCG iterations. |
| TolPCG | Termination tolerance on the PCG iteration. |
| TypicalX | Typical x values. |
| DerivativeCheck | Compare user-supplied derivatives (Jacobian) to finite-differencing derivatives. |
| DiffMaxChange | Maximum change in variables for finite differencing. |
| DiffMinChange | Minimum change in variables for finite differencing. |
| NonlEqnAlgorithm | Choose Levenberg-Marquardt or Gauss-Newton over the trust-region dogleg algorithm. |
| LineSearchType | Line search algorithm choice. |
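The flag convention of the legacy JacobMult option can be illustrated with a small Python sketch. The convention is W = J'*(J*Y) for flag == 0, W = J*Y for flag > 0, and W = J'*Y for flag < 0. Here Jinfo is simply the dense Jacobian, and the function name jmfun is hypothetical. In the real use case, Jinfo holds whatever structured data lets you form these products without building J.

```python
def matmul(P, Q):
    """Product of two matrices stored as nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q))) for j in range(len(Q[0]))]
            for i in range(len(P))]

def transpose(P):
    """Transpose of a nested-list matrix."""
    return [list(row) for row in zip(*P)]

def jmfun(Jinfo, Y, flag):
    """Hypothetical Jacobian-multiply function following the JacobMult flag rules."""
    J = Jinfo  # illustration only: a dense J stands in for structured data
    if flag == 0:
        return matmul(transpose(J), matmul(J, Y))   # J' * (J * Y)
    if flag > 0:
        return matmul(J, Y)                          # J * Y
    return matmul(transpose(J), Y)                   # J' * Y

J = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(jmfun(J, [[1.0], [0.0]], 0))
```

With a 3-by-2 J, flag > 0 expects a 2-row Y, flag < 0 expects a 3-row Y, and flag == 0 expects a 2-row Y and returns a 2-row result.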